How to Add ChatGPT to Your Meshtastic Network

Bring ChatGPT to your Meshtastic mesh network with a simple Python bot. One internet-connected node serves the whole mesh, enabling instant answers for survival, field ops, education, and more, and showcasing just how flexible and extensible Meshtastic can be.

A few months back, I shared my experiments with sending voice messages over Meshtastic networks. That project got a lot of attention and showed me just how eager the community is to push these mesh devices beyond their traditional boundaries. Today, I'm sharing something new: a complete AI bot system that brings GPT-powered responses directly to your mesh network.

What We're Building

This AI bot turns any Meshtastic node with internet access into an intelligent hub for your entire mesh network. Anyone on the network can ask questions by sending messages with a command prefix (like "!"), and the bot responds with AI-generated answers. Think of it as giving your mesh network a brain.

The use cases are pretty compelling:

- Emergency situations where you need instant medical or survival advice

- Remote expeditions where identifying plants, weather patterns, or troubleshooting equipment matters

- Technical support for field operations

- Educational applications for outdoor groups

- General knowledge access when you're off-grid but still connected to your mesh

Unlike other AI implementations I've seen that use complex MQTT routing or try to run models offline (which requires serious hardware), this approach is refreshingly simple: one node with internet access serves the entire mesh.

Understanding the Architecture

The system works on a hub-and-spoke model:

The Hub Node (AI Bot):

- Connected to both the mesh network and the internet

- Runs a Python application with a GUI

- Processes incoming commands and queries OpenAI's API

- Manages response length to fit Meshtastic's limitations

- Broadcasts answers back to the mesh

Mesh Network Participants:

- Send queries using command prefix (default: "!")

- Receive AI responses as regular text messages

- No special software or configuration required

- Works with any Meshtastic device

The beauty is that only one node needs internet access and the bot software. Everyone else just uses their devices normally.

Prerequisites and Requirements

Before we dive into the build, here's what you'll need:

Hardware:

- At least one Meshtastic device (T-Beam, Heltec, LilyGo, etc.)

- USB cable for device connection

- Computer with Windows, Mac, or Linux

- Internet connection for the bot node

Software:

- Python 3.7 or newer

- OpenAI API key (paid account recommended for reliability)

- Required Python libraries (detailed below)

Network Setup:

- Functioning Meshtastic mesh network

- At least one other device to test with

Installation and Setup

Let's get this thing running. I'll walk you through every step.

Step 1: Clone the Repository

git clone https://github.com/TelemetryHarbor/meshtastic-ai-bot.git

cd meshtastic-ai-bot

Step 2: Install Python Dependencies

The bot requires several Python packages. Install them with:

pip install -r requirements.txt

If you're on Linux, you might need to install tkinter separately:

sudo apt-get install python3-tk
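If you want to confirm the environment before launching, a small check like the one below can help. It is only a sketch: the module names are my assumption about what requirements.txt pulls in, so treat the repository's file as the authoritative list. A module that is found can still fail at import time, so this is a quick sanity check and nothing more.

```python
import importlib.util
import sys

# Assumed module names; check requirements.txt in the repository
# for the authoritative list.
ASSUMED_MODULES = ["meshtastic", "openai", "pubsub", "tkinter"]


def missing_modules(names):
    """Return the module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def python_is_new_enough(minimum=(3, 7)):
    """True when the running interpreter meets the stated minimum version."""
    return sys.version_info[:2] >= minimum


if __name__ == "__main__":
    print("Python OK:", python_is_new_enough())
    print("Missing modules:", missing_modules(ASSUMED_MODULES) or "none")
```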

Step 3: Hardware Connection

Connect your Meshtastic device to your computer via USB. Make sure it's properly configured and connected to your mesh network. You can verify this using the Meshtastic CLI or mobile app before proceeding.

Step 4: Get Your OpenAI API Key

Head to OpenAI's website and create an account if you don't have one. You'll need to:

- Go to the API section

- Create a new API key

- Add some credits to your account (the bot uses GPT-4o-mini, which is very cheap)

Keep this API key handy - you'll need it in the next step.

Step 5: Launch the Application

Run the bot with:

python meshtastic_ai_bot.py

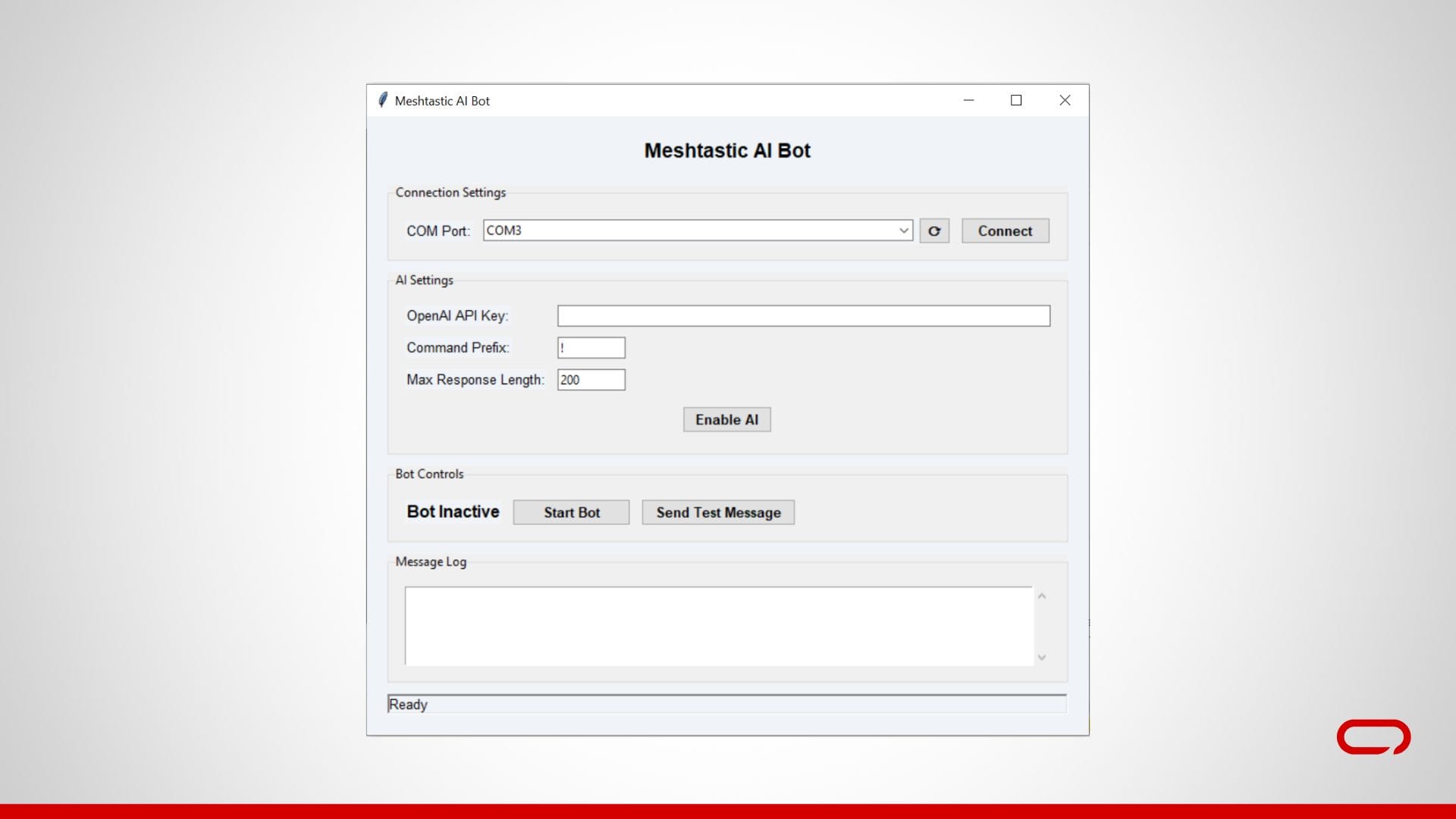

You should see a GUI window with several sections for configuration.

Configuration Walkthrough

The interface is organized into logical sections. Let me walk you through each one:

Connection Settings

COM Port Selection: Click the refresh button (⟳) to scan for available ports. Your Meshtastic device should appear in the dropdown. Select it and click "Connect."

The log will show connection status:

[2025-01-15 10:30:15] Connecting to Meshtastic device on COM3...

[2025-01-15 10:30:16] Connected to Meshtastic device successfully

[2025-01-15 10:30:16] Connected to node: 123456789

AI Settings

OpenAI API Key: Paste your API key here. The field is masked for security, but the key is stored in memory while the app runs.

Command Prefix: Set the character that triggers AI responses. Default is "!" but you can use any character. For example:

- "!When is the sunset?"

- "?How do I treat a sprain?"

- "@Tell me about local wildlife"

Max Response Length: Meshtastic has a ~240 character limit for text messages. I recommend keeping this at 200 to be safe. The bot automatically truncates longer responses.
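To make the truncation step concrete, here is a minimal sketch of how a response could be shortened to fit. The function name truncate_response and the word-boundary rule are my own illustration, not necessarily what the bot does internally. It counts characters, so text with many non-ASCII characters may need a stricter, byte-based check.

```python
def truncate_response(text, max_len=200, suffix="..."):
    """Shorten text to at most max_len characters, preferring a word boundary."""
    text = " ".join(text.split())  # collapse newlines and repeated spaces
    if len(text) <= max_len:
        return text
    cut = text[: max_len - len(suffix)]
    # Back up to the last space so a word is not cut in half, unless that
    # would throw away more than a third of the allowed length.
    last_space = cut.rfind(" ")
    if last_space > max_len * 2 // 3:
        cut = cut[:last_space]
    return cut.rstrip() + suffix
```

The suffix counts toward the limit, so the returned string never exceeds max_len as long as max_len is larger than the suffix itself.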

Once configured, click "Enable AI" to activate the OpenAI connection.

Bot Controls

Bot Status: Shows whether the bot is actively listening for commands.

Start/Stop Bot: Once connected to both Meshtastic and OpenAI, click "Start Bot" to begin listening for commands on the mesh network.

Send Test Message: Useful for verifying your setup works before going live.

How to Use the Bot

Once everything is configured and running, using the bot is straightforward:

For Bot Operators

- Ensure your device is connected and the bot shows "Active"

- Monitor the log for incoming queries and responses

- The system handles everything automatically

- You can stop/start the bot as needed

For Mesh Network Users

Send any message starting with your configured prefix:

!How do I start a fire in wet conditions?

!What's 150 kilometers in miles?

!Identify symptoms of altitude sickness

!Best way to purify water from streams?

The AI will respond with concise, relevant answers:

AI: Start fire in wet conditions: 1) Find dry tinder inside dead branches 2) Build platform of dry logs 3) Use birch bark/fatwood 4) Create windbreak 5) Start small, build gradually. Carry dry tinder in waterproof container.

Understanding the Technical Implementation

Let me break down how this actually works under the hood.

Message Processing Flow

- Incoming Message Detection: The bot subscribes to Meshtastic's message events using the pubsub library. Every text message gets processed through the on_receive method.

- Command Recognition: Messages are checked for the command prefix. If found, the prefix is stripped and the remaining text becomes the query.

- Response Formatting: The AI response is truncated if necessary and sent back to the mesh network using interface.sendText().

- Broadcast Distribution: Meshtastic handles distributing the response to all nodes in the network.

AI Processing: Between command recognition and response formatting, the query gets sent to OpenAI with a system prompt that emphasizes brevity:

system_prompt = f"""You are a helpful assistant responding via Meshtastic radio network.

Your response MUST be under {self.max_response_length} characters. Be concise and helpful.

If the query requires a long answer, provide the most important information first."""
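The whole flow can be sketched in a few small functions. This is an illustration under assumptions, not the repository's code: extract_query, build_messages, and handle_text are hypothetical names, and the AI call and the radio send are passed in as plain callables so the logic can be tried without hardware or an API key.

```python
def extract_query(text, prefix="!"):
    """Return the query without its prefix, or None if text is not a command."""
    if not text or not text.startswith(prefix):
        return None
    query = text[len(prefix):].strip()
    return query or None


def build_messages(query, max_len=200):
    """Build the chat messages: the brevity system prompt plus the user query."""
    system_prompt = (
        "You are a helpful assistant responding via Meshtastic radio network.\n"
        f"Your response MUST be under {max_len} characters. Be concise and helpful.\n"
        "If the query requires a long answer, provide the most important information first."
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": query},
    ]


def handle_text(text, ask_ai, send, prefix="!", max_len=200):
    """Run one message through the flow; return the reply sent, or None."""
    query = extract_query(text, prefix)
    if query is None:
        return None  # ordinary chat message, not meant for the bot
    try:
        answer = ask_ai(build_messages(query, max_len))
    except Exception as exc:  # report API failures back to the mesh
        answer = f"Error: {exc}"
    reply = ("AI: " + answer)[:max_len]  # hard cut as a last resort
    send(reply)
    return reply


# Wiring sketch (not executed here; needs the meshtastic, pypubsub and
# openai packages, a connected device, and an API key):
#   from pubsub import pub
#   import meshtastic.serial_interface
#   from openai import OpenAI
#   client = OpenAI(api_key="...")
#   interface = meshtastic.serial_interface.SerialInterface()
#   def ask_ai(messages):
#       response = client.chat.completions.create(model="gpt-4o-mini", messages=messages)
#       return response.choices[0].message.content
#   def on_receive(packet, interface):
#       handle_text(packet["decoded"]["text"], ask_ai, interface.sendText)
#   pub.subscribe(on_receive, "meshtastic.receive.text")
```

Keeping the API call and the send function as parameters means you can test the command handling with a fake AI (for example, lambda messages: "About 93 miles.") before putting anything on the air.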

Error Handling

The system includes several layers of error handling:

- Connection failures: Bot stops gracefully if Meshtastic disconnects

- API errors: Sends error messages back to the network

- Rate limiting: Built-in delays prevent API abuse

- Message deduplication: Prevents processing the same message twice
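Two of these layers, deduplication and rate limiting, are easy to sketch in isolation. The classes below are hypothetical and only show one way such checks could work; the capacity and the two-second interval are illustrative values, not the bot's actual settings.

```python
import time
from collections import OrderedDict


class SeenMessages:
    """Remember the most recent message IDs so repeats can be skipped."""

    def __init__(self, capacity=200):
        self.capacity = capacity
        self._ids = OrderedDict()

    def is_duplicate(self, message_id):
        if message_id in self._ids:
            return True
        self._ids[message_id] = None
        if len(self._ids) > self.capacity:
            self._ids.popitem(last=False)  # forget the oldest ID
        return False


class MinInterval:
    """Allow one action every min_seconds; callers skip or wait otherwise."""

    def __init__(self, min_seconds=2.0, clock=time.monotonic):
        self.min_seconds = min_seconds
        self.clock = clock
        self._last = None

    def allow(self):
        now = self.clock()
        if self._last is not None and now - self._last < self.min_seconds:
            return False
        self._last = now
        return True
```

A handler would call is_duplicate with the packet's ID first, then allow() before contacting the API. The clock is injectable so the timing logic can be tested without sleeping.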

Performance Considerations

API Costs: GPT-4o-mini is very affordable. Typical queries cost fractions of a penny. Even heavy usage should cost under $5/month.
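For a rough sense of the numbers, here is a back-of-the-envelope estimate. The prices and token counts are assumptions on my part (GPT-4o-mini list prices of $0.15 per million input tokens and $0.60 per million output tokens, roughly 80 input and 60 output tokens per query), so check OpenAI's pricing page for current figures.

```python
# Assumed list prices in USD per one million tokens; these change over time.
INPUT_PRICE_PER_M = 0.15
OUTPUT_PRICE_PER_M = 0.60


def query_cost(input_tokens, output_tokens):
    """Cost in USD of a single query at the assumed prices."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


def monthly_cost(queries_per_day, input_tokens=80, output_tokens=60, days=30):
    """Cost in USD of a month of queries with assumed token counts per query."""
    return queries_per_day * days * query_cost(input_tokens, output_tokens)


if __name__ == "__main__":
    print(f"Per query: ${query_cost(80, 60):.6f}")
    print(f"1000 queries/day for 30 days: ${monthly_cost(1000):.2f}")
```

Under these assumptions a single query costs about $0.000048, and even 1,000 queries a day comes to about $1.44 a month.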

Response Time: Most queries complete in 2-5 seconds, depending on internet connection and API response time.

Network Impact: Each AI response is a single text message, so network impact is minimal compared to my voice messaging experiments.

Advanced Configuration

Customizing Response Behavior

You can modify the system prompt in the code to change how the AI responds:

system_prompt = f"""You are a survival expert responding via radio.

Responses must be under {self.max_response_length} characters.

Prioritize safety and practical advice."""

Logging and Monitoring

The application logs all activity to the GUI and can be extended to log to files:

[2025-01-15 10:35:22] Received message from 987654321: !How to treat burns?

[2025-01-15 10:35:24] AI Response (156 chars): For burns: 1) Cool with water 10-20 mins 2) Remove jewelry/clothing 3) Don't use ice/butter 4) Cover with clean cloth 5) Seek help for severe burns

[2025-01-15 10:35:25] Message sent successfully
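If you want the same entries in a file, Python's standard logging module is one way to do it. This is a sketch of a possible extension, not something the application ships with; make_file_logger and the logger name are hypothetical.

```python
import logging


def make_file_logger(path, name="meshtastic_ai_bot"):
    """Return a logger that appends '[YYYY-MM-DD HH:MM:SS] message' lines to path."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    handler = logging.FileHandler(path, encoding="utf-8")
    handler.setFormatter(
        logging.Formatter("[%(asctime)s] %(message)s", datefmt="%Y-%m-%d %H:%M:%S")
    )
    # Call this once per logger name; calling it again adds a second handler
    # and every line would be written twice.
    logger.addHandler(handler)
    return logger
```

Calling make_file_logger("bot.log").info("Message sent successfully") appends a line in the same timestamped format the GUI log uses.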

Troubleshooting Common Issues

"No COM ports found"

- Check USB connection

- Install Meshtastic device drivers

- Try different USB cable/port

- Verify device is powered on

"Failed to connect to OpenAI API"

- Verify API key is correct

- Check internet connection

- Ensure API account has credits

- Try regenerating API key

"Messages not being received"

- Verify mesh network connectivity

- Check if other devices can communicate

- Ensure bot is actually started (not just connected)

- Try sending test message first

"Responses too long"

- Reduce max response length setting

- AI sometimes ignores length constraints with complex queries

- Consider splitting complex questions into parts

Limitations and Considerations

Let's be realistic about what this system can and can't do:

Network Dependencies:

- Bot node requires internet access

- If internet fails, AI functionality stops

- Mesh network itself continues working normally

- For now, it only works on the public channel

Response Quality:

- The ~200-character limit means simple answers only

- No memory is implemented yet, so follow-up questions won't work. (Open a PR if you'd like to contribute.)

- AI can occasionally give incorrect information

Performance:

- Response time depends on internet speed

- API rate limits could cause delays with heavy usage

Cost:

- Requires paid OpenAI account

- Usage costs scale with query volume

- Monitor API usage to avoid surprises

Real-World Applications

Hiking Groups: Hikers can ask about trail conditions, weather, first aid, or plant identification without leaving the mesh network.

Emergency Response: During training exercises, teams use the bot for quick protocol lookups, medication dosages, or technical procedures without breaking radio discipline.

Educational Expeditions: Students can ask questions about geology, biology, or local history while maintaining communication through Meshtastic devices.

Technical Field Work: Engineers and technicians get instant access to specifications, troubleshooting steps, or calculation tools while working in remote locations.

Future Enhancements

This is just the beginning. Here are some improvements we can work on:

Multi-Model Support: Add support for different AI models, specialized APIs, and tool calling (weather, identification, etc.).

Message Threading (History): Allow follow-up questions that reference previous context.
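One way this could work is to keep a short history per sender and prepend it to each request. The sketch below is hypothetical and not part of the bot yet; the Conversations class and its three-turn default are my own illustration.

```python
from collections import defaultdict, deque


class Conversations:
    """Keep the last few question/answer pairs for each sender."""

    def __init__(self, max_turns=3):
        # Each turn is two messages (user + assistant), hence 2 * max_turns.
        self._history = defaultdict(lambda: deque(maxlen=2 * max_turns))

    def record(self, sender, query, answer):
        history = self._history[sender]
        history.append({"role": "user", "content": query})
        history.append({"role": "assistant", "content": answer})

    def messages_for(self, sender, system_prompt, query):
        """System prompt, then stored history, then the new query."""
        return [
            {"role": "system", "content": system_prompt},
            *self._history[sender],
            {"role": "user", "content": query},
        ]
```

Keeping the history short matters here: every stored turn is sent again as input tokens on each follow-up question.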

Response Caching: Store common questions locally to reduce API calls and improve response time.
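A cache for this could be as simple as a small in-memory store keyed on a normalized query. The sketch below is a hypothetical starting point, not an existing feature; it treats queries as the same only when they match after lowercasing and whitespace cleanup.

```python
from collections import OrderedDict


def normalize(query):
    """Lowercase and collapse whitespace so near-identical queries share a key."""
    return " ".join(query.lower().split())


class ResponseCache:
    """Small least-recently-used cache of answers keyed by normalized query."""

    def __init__(self, capacity=100):
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, query):
        key = normalize(query)
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as recently used
        return self._items[key]

    def put(self, query, answer):
        key = normalize(query)
        self._items[key] = answer
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used
```

The bot would call get before contacting the API and put after a successful answer. Time-sensitive questions (weather, sunset times) probably should not be cached this way.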

Priority Queuing: Handle emergency queries faster than general questions.
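A crude version of this idea fits in a few lines with a heap. Everything here is hypothetical, including the keyword list; simple substring matching will misfire on some messages, so treat it as a sketch of the queueing mechanics only.

```python
import heapq
import itertools

# Hypothetical trigger words; a real deployment would need a better signal.
EMERGENCY_WORDS = ("emergency", "sos", "injured", "bleeding")


class QueryQueue:
    """Serve urgent queries first, otherwise in arrival order."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps arrival order

    def push(self, query):
        urgent = any(word in query.lower() for word in EMERGENCY_WORDS)
        priority = 0 if urgent else 1
        heapq.heappush(self._heap, (priority, next(self._counter), query))

    def pop(self):
        """Return the next query to answer, or None when the queue is empty."""
        return heapq.heappop(self._heap)[2] if self._heap else None
```

The counter in each heap entry ensures that two queries with the same priority come out in the order they arrived.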

Integration Improvements: Better integration with Meshtastic's node management and routing features. Maybe in-app support too, like MQTT?

Conclusion

This project is a nice step toward bringing AI into the Meshtastic ecosystem, bridging the gap much like the MQTT feature already does. It also shows how mod-friendly and extensible the network really is, opening the door for other ideas like weather data relays, translation bots, offline knowledge packs, or even local ML models for signal analysis. Features like MQTT and AI are just the beginning, and they hint at how much more we can bring into the mesh in the future.
