How to Send Audio with Meshtastic

Ever wondered if you could send voice notes over Meshtastic's low-bandwidth network? I certainly did, and I'm excited to share my weekend adventure trying to accomplish just that.

So a couple days ago I was having a chat with a friend about how Meshtastic works. He casually asked, "Well, why can't you send audio?" I explained that bandwidth simply isn't enough since it operates on low-energy sub-GHz frequencies. These frequencies give us amazing range but come with serious data limitations - we're talking kilobytes per minute, not the megabytes per second we're used to with modern communications.

Fast forward a bit, and I noticed something interesting in the Meshtastic docs - a mode preset for LoRa called SHORT_TURBO (described as "Fastest, highest bandwidth, lowest airtime, shortest range. It is not legal to use in all regions due to its 500kHz bandwidth.").

This got me wondering: if we can send text super fast (though "fast" in LoRa terms is still not that fast), why can't we send audio or voice notes?

Previous Discussions with Devs

We had a talk about audio transmission about a year ago with the Meshtastic developers, and understandably, they weren't fans of the idea. Imagine holding the frequency for 30-60 seconds just to send a voice message - it would bring the whole network down and make it unreliable, especially considering how the Meshtastic community is growing. That could really hurt performance for many users.

The devs pointed out that LoRa was designed for small, infrequent data packets - not streaming media. A standard Meshtastic configuration might only allow for 5-20 bytes per second depending on settings and conditions. Compare that to the roughly 8 kilobytes per second needed for even the most compressed voice audio, and you can see the problem.

But at the same time, I really wanted to test it. I just wanted to see if we could actually do it. Maybe someone would think of a nice way to optimize it for production eventually.

Sponsor: Telemetry Harbor

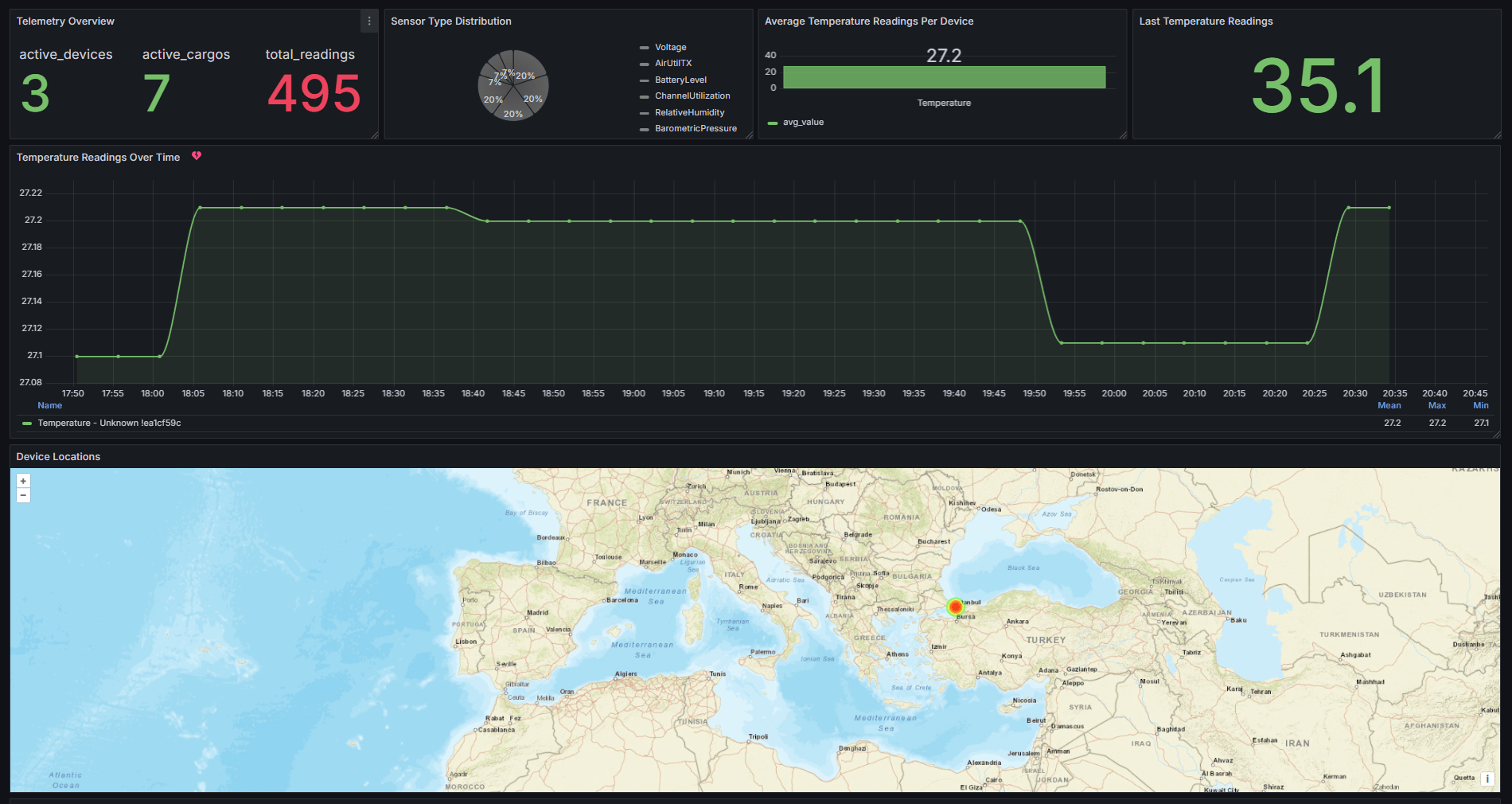

Before we continue, let's give a shoutout to our sponsor for this blog: Telemetry Harbor.

Telemetry Harbor is an all-in-one solution to Collect, Store & Visualize Your IoT Data — All in One Place. It's really easy - create an account, and then it's just a matter of a few HTTPS requests to see your data in Grafana. Super simple, super fast time to first data point.

Along with Harbor AI to talk to your data, it's like having a personal assistant that knows everything about your metrics. They have lots of integrations, including a Meshtastic integration where you can monitor your network and node telemetry.

The Initial Plan

With this challenge in mind, I started thinking about approaches. I obviously didn't want to build my own firmware and app from scratch - that would definitely grow beyond a weekend project.

So my idea was: if we can send messages, what if we convert audio to text, send the text, then convert it back to audio? On paper, it seemed doable.

Being the vibe coder I am, I fired up my $20 LLM subscription and got to work. I asked for Python code to interact with the Meshtastic library to record a voice note, convert it to text, and send it, with plans for the receiving node to convert it back to audio.

Meshtastic Library Limitations

Immediately after implementing the solution, I got an error: the message is more than 256 bytes. This was expected - it's a limitation clearly shown by the iOS app and everywhere else that you can't send long messages.

The error came from the Python library's validation checks:

if len(encoded_message) > 256:

raise Exception(f"Message exceeds maximum size of 256 bytes (was {len(encoded_message)} bytes)")I thought, "OK, maybe if I can overcome the library limitation, maybe the device will send it." So I dug through the library code and removed the validation check. I recorded a voice sample, sent it, and the logs said "sent successfully." But wait - nothing was received on the other side. It seems that the device firmware also has a check in place.

Custom Firmware Attempt

After a bit more digging, I found that the firmware has a Protocol Buffer constant that defines the message length. The protobuf definition in the firmware limited the message size:

/*

* From mesh.options

* note: this payload length is ONLY the bytes that are sent inside of the Data protobuf (excluding protobuf overhead). The 16 byte header is

* outside of this envelope

*/

DATA_PAYLOAD_LEN = 233;So I did what any logical person would do - cloned the repo, edited the DATA_PAYLOAD_LEN value to 150000, recompiled the firmware, flashed two devices, and tried to send another message.

What happened? Nothing - same result. The logs showed "message sent successfully," but nothing appeared on the receiving side. After more investigation, I found that there are multiple layers of validation throughout the stack.

Dead Simple

So I gave up on trying to overcome Meshtastic's fundamental limits. First, I didn't have much time, and also it would be hard to document this process in a blog and tell people to test it themselves.

I thought, "OK, let's convert the voice note to text, chunk it so it doesn't exceed the limit, and send it in parts with some metadata so we can reassemble it on the other side." With help from my $20 LLM, I set up some predefined chunk sizes and compression levels.

The technical implementation looked something like this:

- Record audio using PyAudio at 8kHz, mono, 16-bit PCM

- Compress the audio using different compression algorithms:

- "Very Low" quality: Extreme MP3 compression at 8kbps

- "Low" quality: MP3 compression at 16kbps

- "Medium" quality: MP3 compression at 32kbps

- "High" quality: MP3 compression at 64kbps

- Split the compressed data into chunks with metadata headers:

- Small chunks: 150 bytes of payload data per message

- Medium chunks: 200 bytes of payload data per message

- Large chunks: 230 bytes of payload data per message

- Send each chunk as a separate message

- On the receiving end, collect chunks and reassemble when all are received

- Decompress and play back the audio

Add sequence headers to each chunk:

VOICE|[total_chunks]|[chunk_number]|[compression_level]|[payload]Testing the Chunking Approach

I recorded a voice note with the maximum compression ("Very Low" quality) and medium chunk size. The system started sending "1/27 chunks" and kept going. And what do you know? On the other side, I started receiving chunks! After a long while, the voice note indicator popped up on the receiver.

When I tried to play it, though, it blew my ears out - lots of noise. The compression was too aggressive, causing significant audio artifacts. So I stepped down the compression a bit to "Low" quality and tried another recording. This time it was something like 200 chunks, so it took a good minute or two to transmit. But then it appeared on the other side, and when I clicked play... the voice played clearly! It worked! I could actually send audio through Meshtastic.

The technical breakdown of what worked best:

- "Very Low" compression quality (8kbps MP3)

- "Small" chunks (150 bytes)

- 1-2 second voice messages resulted in about 50-60 chunks

- Total transmission time: approximately 30-120 seconds

The Potential

This experiment made me think about disaster situations where you might need more than just text. Technically, you could send not just voice but anything, as evident from other projects like the Reticulum network where you can send files, images, etc.

Now, this isn't well-supported by the iOS app, but hey, it's possible! It might saturate the network, but the bottom line is: we can do it. We just have to find a clever way of doing it while keeping the Meshtastic network fair for everyone.

Limitations

The code is not by any means stable. Sometimes with large chunk numbers like 400, it would start sending but midway through, the receiving node would stop getting chunks. I tried waiting for acknowledgments, but that would take ages to send anything.

Here are the key technical limitations I encountered:

- Reliability Issues: With no robust acknowledgment system, chunks often got lost

- Network Congestion: Sending multiple chunks in rapid succession would flood the network

- Time Constraints: A 10-second audio clip could take 1-2 minutes to transmit

- Battery Impact: Continuous transmission dramatically reduced device battery life

- Audio Quality: Even at "Low" quality, audio was noticeably compressed

- Range Reduction: In "SHORT_TURBO" mode, range was significantly reduced

Maybe something like what's used in FTP or HTTPS could be implemented to continue the download where it left off. Again, I'm pushing the limits of what Meshtastic is designed for.

But - and this is a big BUT - maybe if the message limit was higher, we could send audio faster since it would be one stable transmission rather than chunks with overhead, acknowledgments, etc.

Another thing to consider is that audio data is pretty big for LoRa. So forget about anything more than 5-10 seconds of decent quality. Would that even be worth it? You can get more bandwidth with lower range, but then why not just use UHF or VHF radio?

Want to Try It Yourself?

If you're adventurous and want to experiment with voice messaging over Meshtastic, I've made the proof-of-concept application available on GitHub:

Important: This is strictly a proof of concept and not intended for production use. The transmission is unreliable and serves primarily to demonstrate the possibility.

Features of the Meshtastic Voice Messenger:

- Record voice messages of configurable length

- Compress audio using different quality settings

- Split large messages into chunks for transmission

- Reassemble received chunks into complete audio messages

- Play received voice messages

- Send test messages to verify connectivity

- Detailed logging for debugging

Requirements:

- Python 3.7+

- Meshtastic-compatible device (e.g., T-Beam, Heltec, LilyGo)

- Required Python packages: meshtastic, pyaudio, numpy, tkinter

Quick Setup:

- Clone the repository:

git clone https://github.com/TelemetryHarbor/meshtastic-voice-messenger.git

cd meshtastic-voice-messenger- Install required packages:

pip install meshtastic pyaudio numpy- Connect your Meshtastic device via USB

- Run the application:

python app.pyRemember: Both the sender and receiver need to run the application for the voice messaging to work. Also, keep in mind that through extensive testing, I found that "Very Low" compression quality and "Small" chunk size (150 bytes) provide the best balance between audio quality and transmission reliability.

Conclusion

I was just curious and had a free weekend, so I wanted to try something cool. This could be the start for some plugins or new tools for Meshtastic. The potential is there, even if the practical application might be limited.

While this proof of concept demonstrates that voice transmission is technically possible, a production-ready implementation would require:

- More efficient compression algorithms

- Better error correction and recovery mechanisms

- Improved acknowledgment and retry logic

- Optimized bandwidth usage

- Integration with the Meshtastic protocol at a deeper level

Who knows what other capabilities we might discover as we keep pushing the boundaries of this fascinating technology? If you're interested in contributing to this experiment or have ideas for improvement, feel free to fork the repository and submit pull requests!

Comments ()